papers_we_read

An Image is Worth One Word: Personalizing Text-to-Image Generation using Textual Inversion

Rinon Gal1, Yuval Alaluf, Yuval Atzmon, Or Patashnik, Amit H. Bermano, Gal Chechik, Daniel Cohen-Or ICVR 2023

Summary

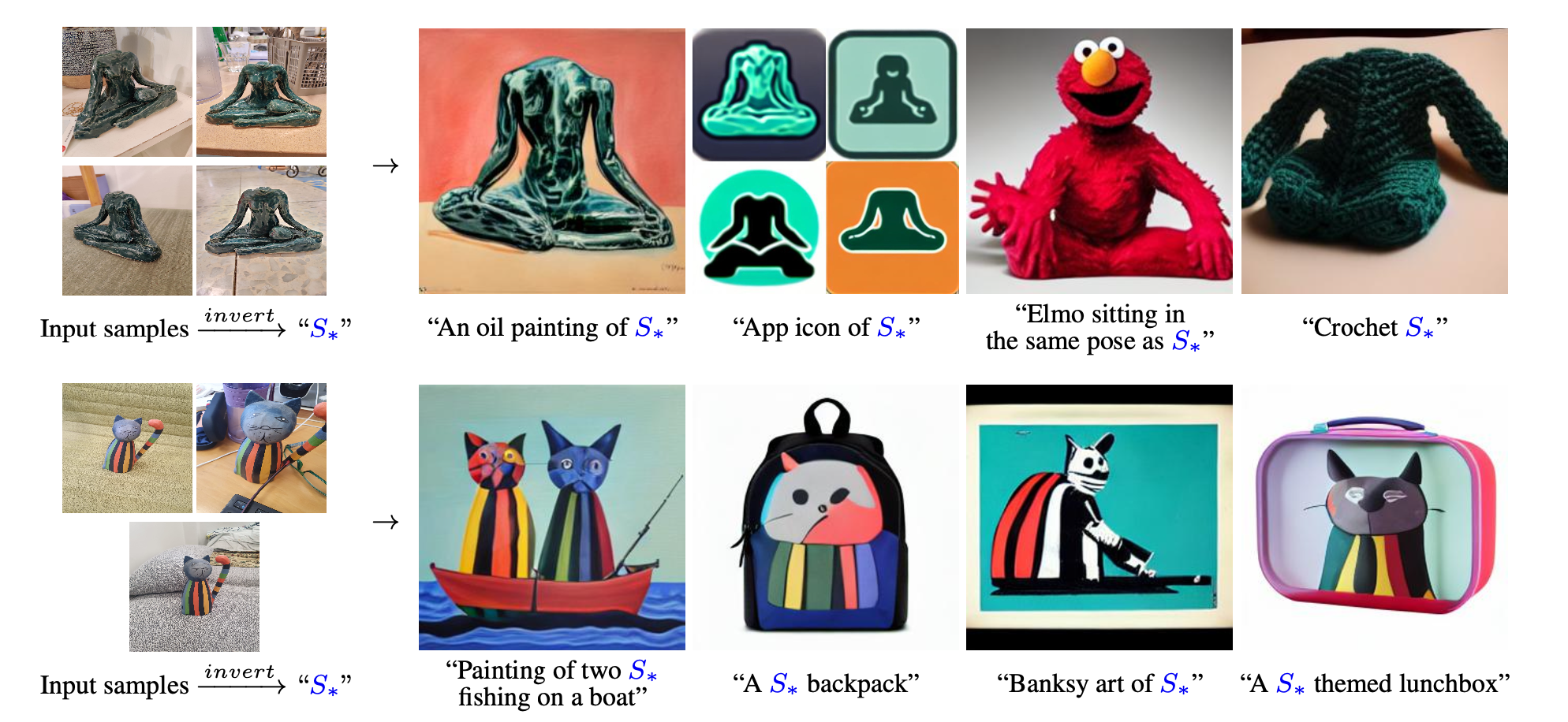

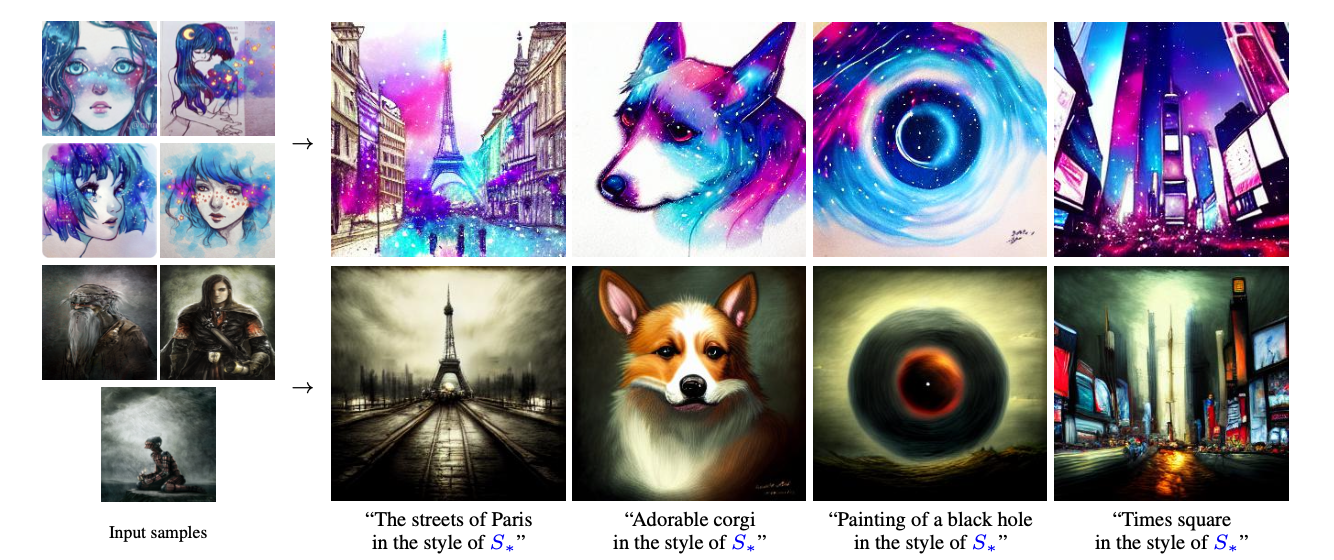

The paper introduces a method which enables users a high level of creative freedom in generating images from natural language prompts by finding new words in the textual embedding space, represented by pseudo-words which helps in introducing new specific concepts into text-to-image models.

Contributions

-

Addressing the challenge of large-scale text-to-image models which are constrained by user’s ability to describe desired target through text, by introducing personalized text-to-image generation.

-

Introduction of ‘Textual inversions’ as a method to find pseudo words in embedding space for capturing semantics and visual details.

-

Address the challenges associated with fine-tuning or re-training models for each new concept while preserving model’s existing capabilities.

Methodology

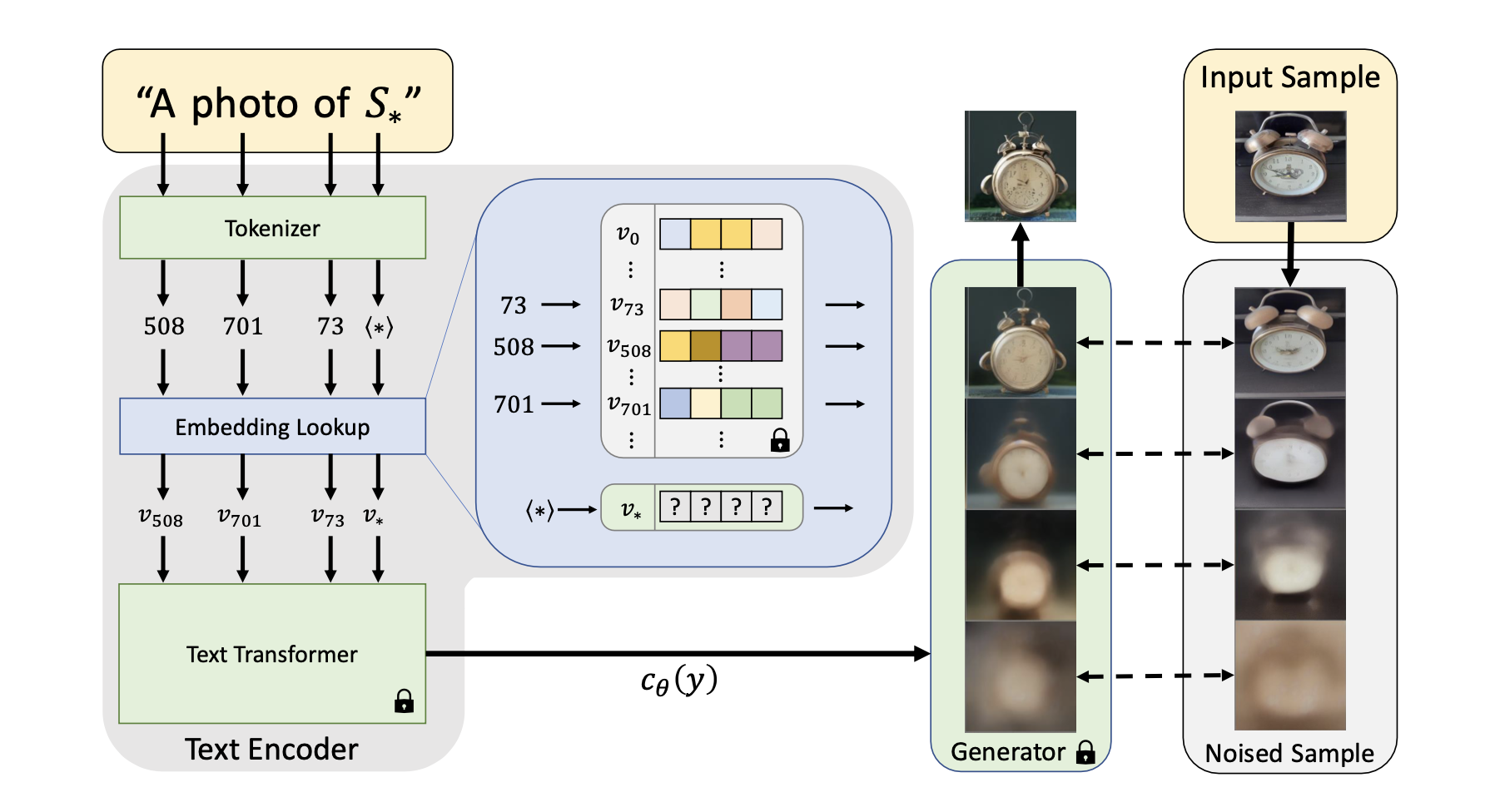

The primary challenge being addressed in the text is the difficulty of introducing new concepts into large-scale text-to-image models. The common challenges faced include the high cost of re-training models with expanded datasets for each new concept and the problem of catastrophic forgetting when fine-tuning on few examples. The proposed solution aims to tackle these challenges by introducing a method called “Textual Inversion” which works by transforming specific concepts into new made-up words. These made-up words can then be used to describe and create new scenes using simple sentences, making it easy for users to make changes.

The approach looks at the word-embedding stage of the text encoders which focuses on visually reconstructing images ensuring that the generated pictures match the desired concepts more accurately.

Results

The method is tested on Latent Diffusion models and the extensive experiments shows the effectiveness of proposed approach in capturing and representing concepts using natural language, especially with a single word and offering good flexibility.

Two-Cents

-

The paper provides more freedom in creating images from words. It’s good at capturing the general idea of a concept, even though it might not be super precise with details.

-

This method makes it easier to edit pictures, but there’s a risk that people might use it to create fake images, especially of private individuals. This suggests a need for more research in this area to understand and address these potential misuses.

Resources

- Link to Paper and Code

- YouTube video

- Blog